Switches are not only used in LAN networks; they are also used extensively in wide area networks (WANs). In an Ethernet switching environment, the switch uses Carrier Sense Multiple Access with Collision Detection (CSMA/CD). The switch or host sends out a packet and detects whether a collision occurs. If there is a collision, the sender waits a random amount of time and then retransmits the packet. If the sender does not detect a collision, it sends out the next packet. You may think that if the switch or host is set to full-duplex, there will be no collision. That is correct, but the host still waits a minimum interframe gap between packets.
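In IEEE 802.3 Ethernet, the "random amount of time" is chosen by truncated binary exponential backoff. The Python sketch below models only the collision-and-retry part of CSMA/CD (carrier sensing is not simulated) and assumes the 512-bit slot time of 10 and 100 Mbps Ethernet. The `collides` callback and the function names are hypothetical, chosen for illustration.

```python
import random

SLOT_TIME_BITS = 512  # slot time of 10/100 Mbps Ethernet, in bit times
BACKOFF_LIMIT = 10    # the random range stops doubling after 10 collisions
ATTEMPT_LIMIT = 16    # the frame is discarded after 16 failed attempts


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """Slot times to wait after the given number of collisions."""
    if collisions < 1:
        raise ValueError("backoff applies only after a collision")
    k = min(collisions, BACKOFF_LIMIT)
    return rng.randrange(2 ** k)  # uniform over 0 .. 2**k - 1


def send_with_csma_cd(collides, rng=None) -> int:
    """Try to send one frame; return the total backoff in bit times.

    `collides(attempt)` is a hypothetical callback that reports whether the
    given transmission attempt (1, 2, ...) ran into a collision.
    """
    rng = rng or random.Random()
    waited_bits = 0
    for attempt in range(1, ATTEMPT_LIMIT + 1):
        if not collides(attempt):
            return waited_bits  # success: no collision detected
        if attempt == ATTEMPT_LIMIT:
            break  # no backoff after the final allowed attempt
        waited_bits += backoff_slots(attempt, rng) * SLOT_TIME_BITS
    raise RuntimeError("excessive collisions: frame discarded")
```

For example, `send_with_csma_cd(lambda attempt: attempt < 3)` collides twice, so the returned wait is a random multiple of 512 bit times between 0 and 4 × 512. Doubling the range after each collision spreads competing senders apart quickly when the segment is busy.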

In a Token Ring switching environment, a token is passed from one port to the next. The host must have possession of the token to transmit. If the token is already in use, the host passes the token on and waits for it to come around again. All stations on the network must wait for an available token. An active monitor, which could be any station on the segment, performs a ring maintenance function and generates a new token if the existing token is lost or corrupted.
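The waiting imposed by token passing can be seen in a small Python simulation. It rests on simplifying assumptions: the token visits stations in fixed ring order, a station holding the token sends at most one frame per visit, and ring maintenance by the active monitor is not modeled. The function name and the one-frame-per-visit policy are illustrative, not taken from the Token Ring specification.

```python
from collections import deque


def token_ring(queues: list[list[str]], rotations: int) -> list[tuple[int, str]]:
    """Simulate a simplified token-passing ring.

    queues[i] holds the frames waiting at station i. Returns the order in
    which frames are transmitted as (station, frame) pairs.
    """
    pending = [deque(q) for q in queues]
    sent = []
    for _ in range(rotations):
        # The token travels around the ring, visiting each station in turn.
        for station, queue in enumerate(pending):
            if queue:
                # Only the token holder may transmit (one frame per visit).
                sent.append((station, queue.popleft()))
            # The station then passes the token to its downstream neighbor.
    return sent
```

For example, `token_ring([["a1", "a2"], [], ["c1"]], rotations=2)` returns `[(0, 'a1'), (2, 'c1'), (0, 'a2')]`: station 0 must wait a full rotation of the token before it can send its second frame.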

As you can see, both Token Ring and Ethernet switching require the node to wait. The node must wait either for the token or for the frame to reach the other nodes. This is not the most efficient use of bandwidth. In a LAN environment, this inefficiency is not a major concern; in a WAN, it becomes unacceptable. Can you imagine if your very expensive T1 link could be used only half the time? To overcome this problem, WAN links use serial transmission.

Serial transmission sends the electrical signal (bits) down the wire one after another. It does not wait for one frame to reach the other end before transmitting the next frame. To identify the beginning and the end of a frame, a timing mechanism is used. The timing can be either synchronous or asynchronous. Synchronous signals use an identical clock rate, and the clocks are set to a reference clock. Asynchronous signals do not require a common clock; the timing comes from special bits in the transmission stream. Asynchronous serial transmissions put a start bit before and a stop bit after each character (usually 1 byte). This is an eight-to-two ratio of data to overhead (2 of every 10 bits on the wire), which is very expensive on a WAN link. Synchronous serial transmissions do not have such high overhead, because they do not require the per-character start and stop bits; they also carry a larger payload.

Are synchronous serial transmissions the perfect WAN transmission method? No; the problem lies in how to synchronize equipment miles apart. Synchronous serial transmission is suitable only for distances where the time required for data to travel the link does not distort the synchronization. So, first we said that serial is the way to go, and now we’ve said that serial has either high overhead or cannot travel a long distance. What do we use? Well, we use both, and cheat a little bit. We use synchronous serial transmission for a short distance and then use asynchronous for the remaining, long distance.
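To make the eight-to-two ratio concrete, the Python sketch below frames bytes the way a typical asynchronous serial port does (one start bit, eight data bits sent least-significant bit first, one stop bit, often written 8N1) and compares the result with a frame that pays its overhead once per frame. The 8-octet per-frame overhead in the example is an illustrative assumption, not a figure from any particular protocol.

```python
def async_frame(data: bytes) -> list[int]:
    """Frame each byte with a start bit (0) and a stop bit (1), 8N1 style."""
    bits = []
    for byte in data:
        bits.append(0)                                  # start bit
        bits.extend((byte >> i) & 1 for i in range(8))  # data bits, LSB first
        bits.append(1)                                  # stop bit
    return bits


def async_efficiency(data: bytes) -> float:
    """Fraction of transmitted bits that carry data."""
    return 8 * len(data) / len(async_frame(data))


def sync_efficiency(payload_octets: int, overhead_octets: int) -> float:
    """Same fraction when the overhead is paid once per frame."""
    return payload_octets / (payload_octets + overhead_octets)


print(async_efficiency(b"hello"))          # 0.8, whatever the data
print(round(sync_efficiency(1500, 8), 4))  # 0.9947 with the assumed overhead
```

Per-character framing always costs 20 percent of the line, while per-frame overhead shrinks as the payload grows.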
We cheat by putting multiple characters in each frame and limiting the overhead. When a frame leaves a host and reaches a router, the router uses synchronous serial transmission to pass the frame on to a WAN transmission device. The WAN device puts multiple characters into each WAN frame and sends it out. To minimize the variation of time between when the frames leave the host and when they reach the end of the link, each frame is divided and put into a slot in the WAN frame. This way, the frame does not have to wait for the transmission of other frames before it is sent. (Remember, this process is designed to minimize wait time.) If there is no traffic to be carried in a slot, that slot is wasted. Figure 1 shows a diagram of a packet moving from LAN nodes to the router and the WAN device.
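The slot mechanism described above is time-division multiplexing (TDM). The minimal Python sketch below assumes one octet per slot and one slot per input channel in every WAN frame; the function names are hypothetical. A channel with nothing to send still occupies its slot, which is the waste the text mentions.

```python
IDLE = None  # marks a slot that carries no traffic (wasted bandwidth)


def tdm_frames(channels: list[list[int]]) -> list[list]:
    """Build fixed-slot TDM frames: slot i of every frame belongs to channel i.

    Each slot carries one octet; frames are generated until every channel's
    queue is empty.
    """
    queues = [list(c) for c in channels]
    frames = []
    while any(queues):
        # Take the next octet from each channel, or leave its slot idle.
        frames.append([q.pop(0) if q else IDLE for q in queues])
    return frames


def wasted_slots(frames: list[list]) -> int:
    """Count the slots that went out empty."""
    return sum(slot is IDLE for frame in frames for slot in frame)


frames = tdm_frames([[1, 2, 3], [9], []])
print(frames)                # [[1, 9, None], [2, None, None], [3, None, None]]
print(wasted_slots(frames))  # 5
```

No channel ever waits for another channel's traffic, but idle channels still consume their share of every frame.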

Figure 1: A packet’s journey from a host to a WAN device. The WAN transmission is continuous and does not have to wait for acknowledgement or permission.

Let’s take a look at how this process would work in a T1 line. T1 has 24 slots in each frame; each slot is 8 bits, and there is 1 framing bit:

24 slots x 8 bits + 1 framing bit = 193 bits

T1 frames are transmitted at a rate of 8,000 frames per second, or one frame every 125 microseconds:

193 bits x 8,000 = 1,544,000 bits per second (bps)

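When you have a higher bandwidth, the frame is bigger and contains more slots (for example, E1 has 32 slots of 8 bits each, for 2.048 Mbps). Because the overhead is a small framing allowance per frame rather than two bits per character, this is a great increase in the effective use of the bandwidth.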
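The T1 arithmetic above generalizes to any fixed-slot frame sent 8,000 times per second. In the Python sketch below, E1 is modeled as 32 eight-bit slots with no extra framing bit, because E1 carries its framing in time slot 0 rather than in a separate bit; the function name is illustrative.

```python
FRAMES_PER_SECOND = 8_000  # one frame every 125 microseconds


def line_rate(slots: int, bits_per_slot: int = 8, framing_bits: int = 0):
    """Return (bits per frame, bits per second) for a fixed-slot TDM frame."""
    frame_bits = slots * bits_per_slot + framing_bits
    return frame_bits, frame_bits * FRAMES_PER_SECOND


print(line_rate(24, framing_bits=1))  # T1: (193, 1544000)
print(line_rate(32))                  # E1: (256, 2048000)
```

On a T1, only 1 of every 193 bits (about 0.5 percent) is framing overhead, compared with 2 of every 10 bits for asynchronous character framing.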

Another asynchronous serial transmission method is Asynchronous Transfer Mode (ATM). ATM is a cell-based switching technology. Each cell has a fixed size of 53 octets: 5 octets of overhead (the cell header) and 48 octets of payload. Bandwidth in ATM is available on demand. It is even more efficient than the slot-based serial transmission method described above because it does not have to wait for assigned slots in the frame. One Ethernet frame can be carried in multiple consecutive cells. ATM also enables Quality of Service (QoS): cells can be assigned different levels of priority, and at any point of congestion, cells with higher priority have preference for the bandwidth. ATM is the most widely used WAN serial transmission method.
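How many cells a frame needs depends on the ATM adaptation layer. The Python sketch below assumes AAL5, which appends an 8-octet trailer to the frame and pads the result to a multiple of 48 octets; any additional encapsulation headers (for example, for bridged Ethernet) are ignored, and the function names are hypothetical.

```python
import math

CELL_SIZE = 53     # octets per ATM cell
CELL_PAYLOAD = 48  # payload octets per cell (the other 5 are the header)
AAL5_TRAILER = 8   # octets appended by AAL5 (assumed adaptation layer)


def cells_for_frame(frame_len: int) -> int:
    """Cells needed for one frame: frame + trailer, padded to 48-octet units."""
    return math.ceil((frame_len + AAL5_TRAILER) / CELL_PAYLOAD)


def wire_efficiency(frame_len: int) -> float:
    """Fraction of the transmitted octets that belong to the original frame."""
    return frame_len / (cells_for_frame(frame_len) * CELL_SIZE)
```

For a 1,500-octet frame, `cells_for_frame(1500)` gives 32 cells, or 1,696 octets on the wire (about 88 percent efficiency), against the ceiling of 48/53 (about 91 percent) set by the cell header alone.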

WAN Transmission Media

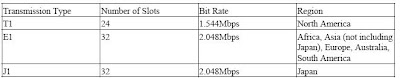

The physical transmission media that carry the signals in a WAN are divided into two kinds: narrowband and broadband. A narrowband transmission consists of a single channel carried by a single medium. A broadband transmission consists of multiple channels in different frequencies carried on a single medium. The most common narrowband transmission types are T1, E1, and J1. See Table 1 for the differences among the transmission types and where each is used. The number of time slots determines how much bandwidth (bit rate) each narrowband transmission type has.

Table 1: Narrowband transmission types


Narrowband is most commonly used by businesses as their WAN medium because of its low cost. If more bandwidth is needed than narrowband can provide, most businesses use multiple narrowband connections. The capability of broadband to carry multiple signals enables it to have a higher transmission speed. Table 2 displays the various broadband transmissions, which require more expensive and specialized transmitters and receivers.

Table 2: The different broadband transmission types and their bandwidth.


Digital signal 2 (DS2), E2, E3, and DS3 describe digital transmission across copper or fiber cables. OC/STS resides almost exclusively on fiber-optic cables. The OC designator specifies an optical transmission, whereas the STS designator specifies the characteristics of the transmission (except the optical interface). There are two types of fiber-optic media:

Single-mode fiber: Has a core of 8.3 microns and a cladding of 125 microns. A single light wave generated by a laser carries the transmission. Single-mode can be used for distances up to 45 kilometers; it has no known speed limitation. Figure 2 shows an example of a single-mode fiber.

Figure 2: Single-mode fiber.

Multimode fiber: Has a core of 62.5 microns and a cladding of 125 microns. Multiple light waves generated by a light-emitting diode (LED) carry the transmission. Multimode has a distance limit of 2 kilometers and a maximum data transfer rate of 155 Mbps in WAN applications. (It has recently been approved for use with Gigabit Ethernet.) The core and cladding boundary works as a mirror to reflect the light waves down the fiber. Figure 3 shows an example of a multimode fiber.

Figure 3: Multimode fiber.

Synchronous Transport Signal (STS)

Synchronous Transport Signal (STS) is the basic building block of the Synchronous Optical Network (SONET). It defines the framing structure of the signal. It consists of two parts: STS overhead and STS payload. In STS-1, the frame is 9 rows of 90 octets. Each row has 3 octets of overhead and 87 octets of payload, resulting in 6,480 bits per frame (9 × 90 octets × 8 bits). A frame occurs every 125 microseconds (8,000 frames per second), yielding 51.84 Mbps.
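The STS-1 figures can be checked with a few lines of Python. Here "payload" means the 87 non-overhead columns of each row as described in the text, and the constant and function names are illustrative.

```python
ROWS = 9                   # rows per STS-1 frame
COLUMNS = 90               # octets per row
OVERHEAD_COLUMNS = 3       # overhead octets at the start of each row
FRAMES_PER_SECOND = 8_000  # one frame every 125 microseconds


def sts1_figures() -> dict:
    """Frame size and bit rates of one STS-1 signal."""
    frame_octets = ROWS * COLUMNS
    payload_octets = ROWS * (COLUMNS - OVERHEAD_COLUMNS)
    return {
        "frame_bits": frame_octets * 8,
        "line_rate_bps": frame_octets * 8 * FRAMES_PER_SECOND,
        "payload_rate_bps": payload_octets * 8 * FRAMES_PER_SECOND,
    }


print(sts1_figures())
# {'frame_bits': 6480, 'line_rate_bps': 51840000, 'payload_rate_bps': 50112000}
```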

STS-n is an interleaving of multiple (n) STS-1s. The sizes of the payload and the overhead are multiplied by n. Figure 4 displays an STS diagram.
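A simplified Python sketch of the interleaving, assuming n equal-length STS-1 frames serialized row by row: taking one octet from each frame in turn produces the STS-n order, in which each row becomes 90 × n octets. Real SONET equipment treats some overhead octets of the constituent STS-1s specially, which this sketch ignores; the function names are hypothetical.

```python
STS1_OCTETS = 9 * 90       # rows x columns in one STS-1 frame
FRAMES_PER_SECOND = 8_000  # one frame every 125 microseconds


def byte_interleave(frames: list[bytes]) -> bytes:
    """Interleave n equal-length STS-1 frames one octet at a time."""
    if not frames or len({len(f) for f in frames}) != 1:
        raise ValueError("need one or more STS-1 frames of equal length")
    # Octet 0 of each frame, then octet 1 of each frame, and so on.
    return bytes(octet for column in zip(*frames) for octet in column)


def sts_n_rate_bps(n: int) -> int:
    """Line rate of STS-n: n times the STS-1 frame, 8,000 frames per second."""
    return n * STS1_OCTETS * 8 * FRAMES_PER_SECOND


print(byte_interleave([b"AAA", b"BBB"]))  # b'ABABAB'
print(sts_n_rate_bps(3))                  # 155520000 (STS-3/OC-3)
```

Because every constituent signal shares the same 125-microsecond frame, interleaving multiplies both the frame size and the bit rate by exactly n.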

Figure 4: The STS-1 framing and STS-n framing. The overhead and payload are proportionate to the n value, with the STS-1 frame as the base.

You may wonder why we’re talking about synchronous transmission when we said it is used only over short distances. Where did the asynchronous transmission go? Well, the asynchronous traffic is encapsulated in the STS payload. The asynchronous serial transmission eliminates the need to synchronize the transmitting equipment at each end. In SONET, most WAN links are point-to-point connections that use light as the signaling source, so the time required for the signal to travel the link does not distort the synchronization. The OC-n signal itself is used for synchronization between equipment. This combination of asynchronous and synchronous serial transmission enables signals to reach across long distances with minimal overhead.

This separation of the reachability information (in the Cisco Express Forwarding table) and the forwarding information (in the adjacency table) provides a number of benefits:


In Fast Switching, the reachability information is indicated by the existence of a node on the binary tree for the destination of the packet. The MAC header and outbound interface for each destination are stored as part
